- Posted By Dr. Anuranjan Bist

Digital Mental Health Apps And The Growing Role of AI in Mental Health

If you’ve ever opened an app at 1:17 a.m. looking for calm (a breathing exercise, a grounding technique, a gentle voice), you’re not alone. Digital mental health apps have quietly become the “first door” many people try before they talk to a professional. And now, with AI in mental health showing up everywhere (chatbots, symptom checkers, “personalized” care plans), that door is turning into a whole hallway of options.

The big question is: how much of this is genuinely helpful, and what should you be careful about? Let’s walk through what the science actually says, where digital mental health apps shine, where they fall short, and how AI in mental health changes the story, sometimes for the better, sometimes not.

What are digital mental health apps, really?

When people say digital mental health apps, they usually mean one (or more) of these:

- Self-guided tools (mood trackers, journaling, CBT exercises)

- Guided programs (structured courses for anxiety, depression, sleep)

- Human-supported therapy platforms (teletherapy + messaging)

- Crisis and support tools (safety plans, helplines, community resources)

- “AI companion” tools (chat-based support, coaching-style prompts)

Here’s the key: digital mental health apps are not one category. The experience you get from a mindfulness app is very different from a clinician-supervised digital therapeutic. That’s why blanket statements like “apps work” or “apps don’t work” usually miss the truth.

And now, AI in mental health is being layered on top, sometimes as a chatbot, sometimes as personalization (nudges, reminders, content selection), sometimes as decision support.

How is AI in mental health changing psychological care?

Let’s make AI in mental health simple: it’s software that detects patterns and generates responses, often using large datasets and (in many modern tools) generative AI. In real-world products, it can do things like:

- Suggest coping strategies based on what you type

- Personalize a daily routine (sleep, stress, mindfulness, CBT modules)

- Flag risk patterns (“You’ve been reporting low mood for 10 days”)

- Help clinicians summarize data from digital mental health apps (trends over time)
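To make the "flag risk patterns" idea concrete, here is a minimal sketch of how such a flag could work. It is an illustration only, not how any particular app does it: the 1-10 mood scale, the threshold of 3, the 10-day window, and the function names `low_mood_streak` and `risk_message` are all assumptions made for this example.

```python
from typing import Optional, Sequence


def low_mood_streak(scores: Sequence[int], threshold: int = 3) -> int:
    """Count how many of the most recent daily check-ins were at or below the threshold.

    Assumes one self-reported score per day on a hypothetical 1-10 scale,
    ordered oldest to newest.
    """
    streak = 0
    for score in reversed(scores):
        if score > threshold:
            break  # the run of low-mood days ends at the first higher score
        streak += 1
    return streak


def risk_message(
    scores: Sequence[int], threshold: int = 3, min_days: int = 10
) -> Optional[str]:
    """Return a gentle flag if the current low-mood streak is long enough, else None."""
    days = low_mood_streak(scores, threshold)
    if days >= min_days:
        return f"You've been reporting low mood for {days} days"
    return None


# Example: two better days followed by ten low days in a row.
recent_scores = [6, 5] + [2] * 10
print(risk_message(recent_scores))
```

A rule this simple only counts numbers. It knows nothing about why the scores are low, which is exactly why a flag like this should prompt a human check-in rather than stand in for one.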

The promise sounds great: faster access, more personalization, lower cost. The caution is equally real: AI in mental health can misunderstand context, miss nuance, or give overly confident guidance when it shouldn’t. The World Health Organization has repeatedly emphasized the need for strong governance and responsible use of AI in healthcare, including newer guidance that addresses large multimodal / generative AI models.

So yes, AI in mental health can expand access, but it also raises new safety, privacy, and reliability questions.

What does science say about digital mental health apps?

Here’s the balanced truth most people don’t get on social media: a large body of research suggests that digital mental health apps produce small but statistically significant improvements in symptoms of depression and generalized anxiety. Studies also indicate that apps incorporating evidence-based features, particularly cognitive behavioral therapy (CBT) interventions and mood monitoring, tend to be associated with better outcomes than unstructured or purely wellness-focused tools.

That lines up with what clinicians see: digital mental health apps can help you build skills, but skills require repetition. An app can teach you a tool. Only you can practice it consistently enough for it to change your nervous system’s default settings.

To make it practical, here’s a quick “what works best” snapshot:

| Type of tool | Best for | What evidence tends to support |
| --- | --- | --- |
| CBT-based programs | Mild–moderate anxiety/depression skills | Stronger results vs generic content |
| Mood tracking + reflection | Awareness, pattern detection | Helpful when paired with action steps |
| Guided sleep / stress tools | Short-term regulation | Good for routine + consistency |
| Therapy platforms | Complex, persistent symptoms | Stronger when clinician-led |

This is why “mental health apps effectiveness” is not a yes/no question. The real question is: Which app? For which problem? With what level of support?

Can AI in mental health improve access and personalization?

This is where AI in mental health genuinely has potential.

If you live in a place with limited providers, if your schedule is chaotic, or if you’re uncomfortable starting therapy right away, digital mental health apps can act as a bridge. AI can make that bridge feel more personal: reminders that match your routine, content that adapts, and check-ins that feel responsive.

This is the upside of AI-powered mental health therapy: not “AI replacing therapy,” but AI helping people start sooner and stick with helpful routines longer.

But you should treat that personalization like you’d treat “recommended videos” on social media: helpful sometimes, biased sometimes, and not a substitute for professional judgment.

Where do digital mental health apps fall short?

Let’s say it plainly: digital mental health apps can’t fully hold complexity.

They struggle when:

- Symptoms are severe (suicidal thoughts, self-harm urges, psychosis)

- There’s significant trauma that needs skilled pacing

- Relationships, identity, grief, or deep patterns require human attunement

- You need accountability that goes beyond reminders

And engagement is a real issue. Many people download digital mental health apps, feel hopeful for a few days, then slowly stop. That’s not a character flaw; it’s how motivation works. Without support, it’s hard to turn a tool into a habit.

This is also where digital therapy for mental health becomes more effective when it’s blended: app + clinician, or app + structured program + check-ins.

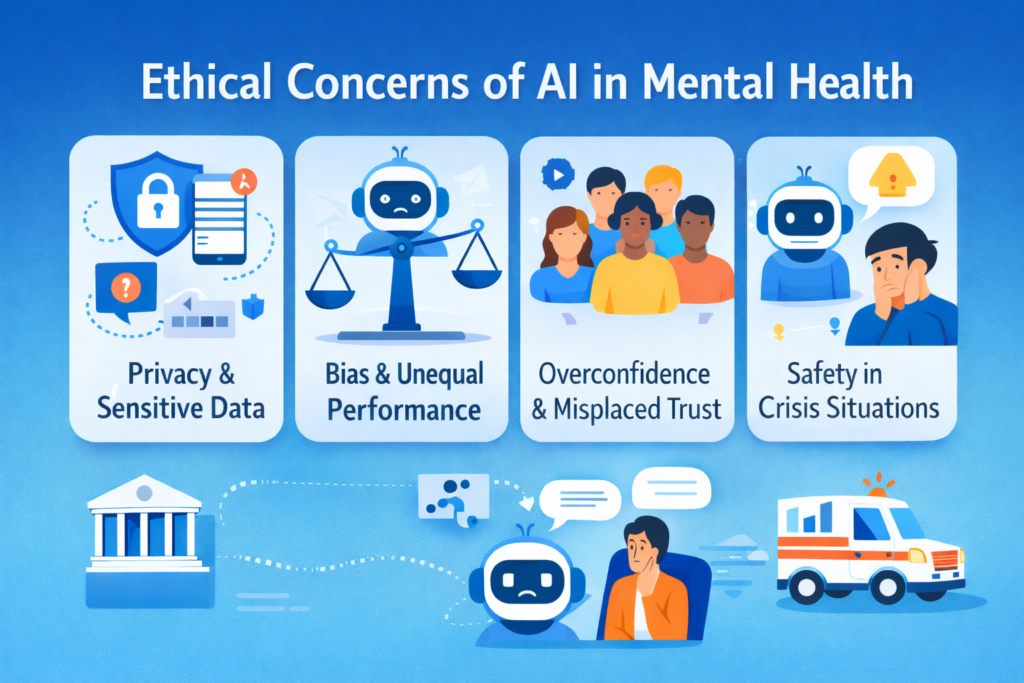

What are the ethical concerns of AI in mental health?

This section matters because it’s where hope can turn into harm if we’re careless.

The phrase ethical concerns of AI in mental health isn’t abstract. It usually means:

Privacy and sensitive data: Your mood, sleep, triggers, and relationships are deeply personal information. WHO’s ethics guidance highlights privacy, data protection, and governance as core requirements for AI in health.

Bias and unequal performance: If an AI model was trained on non-representative data, it may misunderstand language, culture, or symptom expression.

Overconfidence and misplaced trust: A chatbot can sound calm and certain, even when it’s wrong. That confidence can be misleading, especially for vulnerable users.

Safety in crisis situations: Some health systems have explicitly warned against using general-purpose chatbots for therapy or crisis support, emphasizing they cannot replace trained professionals in emergencies. (If you’re in immediate danger, use local emergency services.)

Also worth knowing: regulators are actively discussing how to evaluate safety and evidence for AI-enabled, patient-facing digital mental health tools. The U.S. FDA has been addressing evolving regulatory considerations for digital mental health medical devices, including generative-AI-related risks.

Should digital mental health apps replace traditional therapy?

In most cases, the answer is no, and this is not a limitation of technology. Digital mental health apps are tools, not replacements for professional care. In many ways, they are similar to fitness apps. A workout app can support better health habits, but if you experience chest pain, tracking it is not enough. You seek medical attention.

The same principle applies to mental health. Digital mental health apps can be helpful when you are looking to build skills, create structure, and receive consistent daily support. When symptoms become persistent, severe, or complex, the most effective approach is to combine digital mental health apps with professional care. And in moments of crisis, relying on AI in mental health tools is not appropriate. Immediate human support is essential.

How can you choose digital mental health apps safely?

Here’s a checklist you can actually use before you commit your time:

- Does it use evidence-based methods?

- Does it explain what it can and cannot do?

- Does it have clear privacy policies and data controls?

- Does it encourage professional help when needed?

- If it uses AI in mental health, does it explain how AI is used and what safeguards exist?

And one more practical point: if you’re using digital mental health apps for anxiety or depression and you’re not seeing progress after a few weeks, don’t assume “nothing works.” It may mean you need a different level of care, not more willpower.

Where Mind Brain Institute fits in this conversation

At the Mind Brain Institute, we often see a similar pattern. Many people begin their mental health journey with digital mental health apps. These tools can offer initial relief, but when symptoms continue or become more complex, people often realise they need deeper and more structured support.

This is where evidence-based clinical care plays an important role. At the institute, a holistic approach is used, with therapies tailored to each person’s situation, needs, and clinical profile. Care plans are designed thoughtfully, combining different methods when required, and always guided by professional evaluation.

The idea is simple. Digital mental health apps can be part of your mental health journey, but they do not have to be the whole journey.